AI-powered Voice Analysis for Faster Mental Health Diagnosis

Transcarent

Project Overview

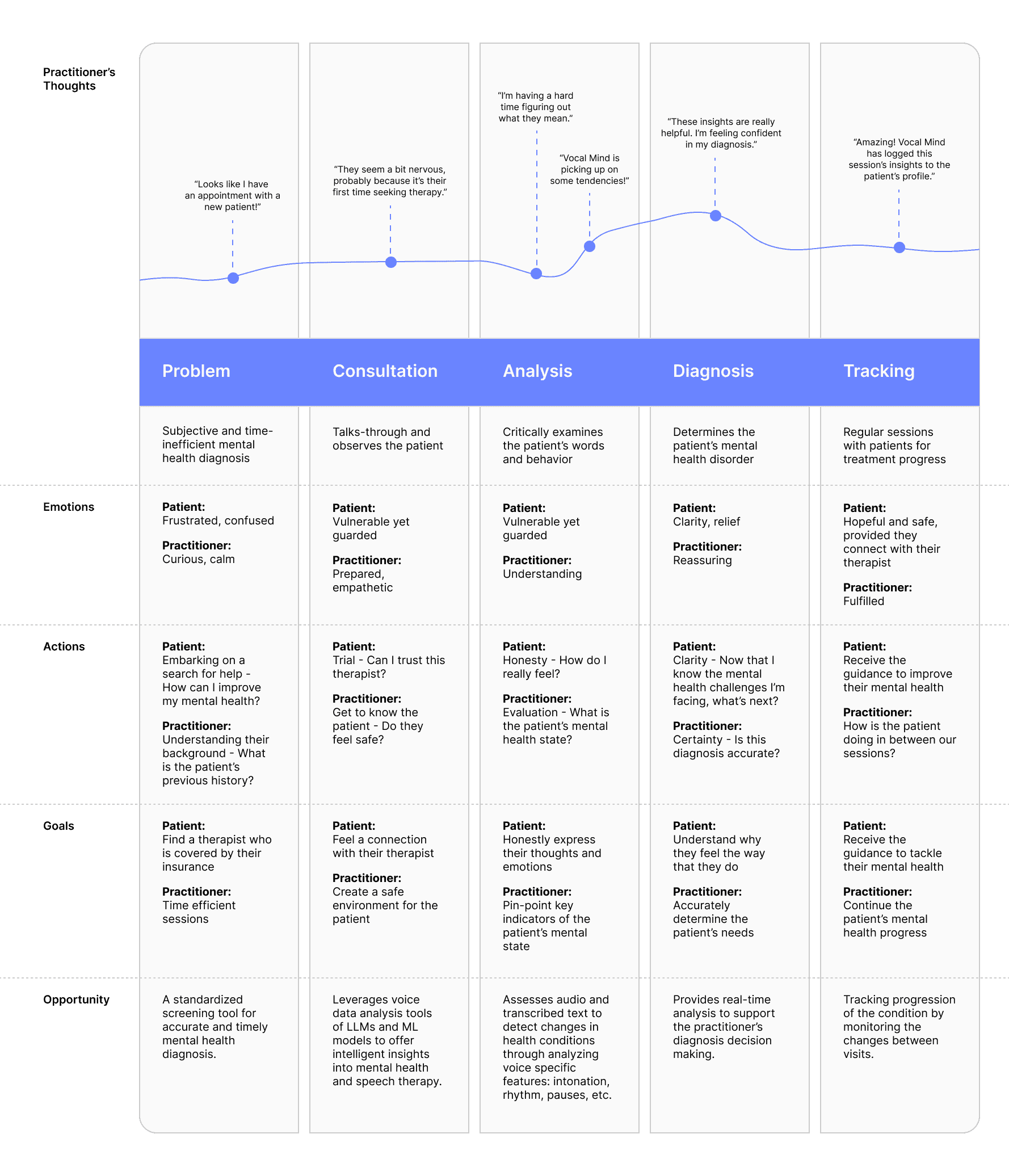

Getting a mental health diagnosis can take weeks or even years. The process relies on doctors' subjective judgment, and patients often struggle to articulate their symptoms, which can delay treatment. Transcarent partnered with Cornell Tech to explore a tech-driven solution that fits into the clinical workflow.

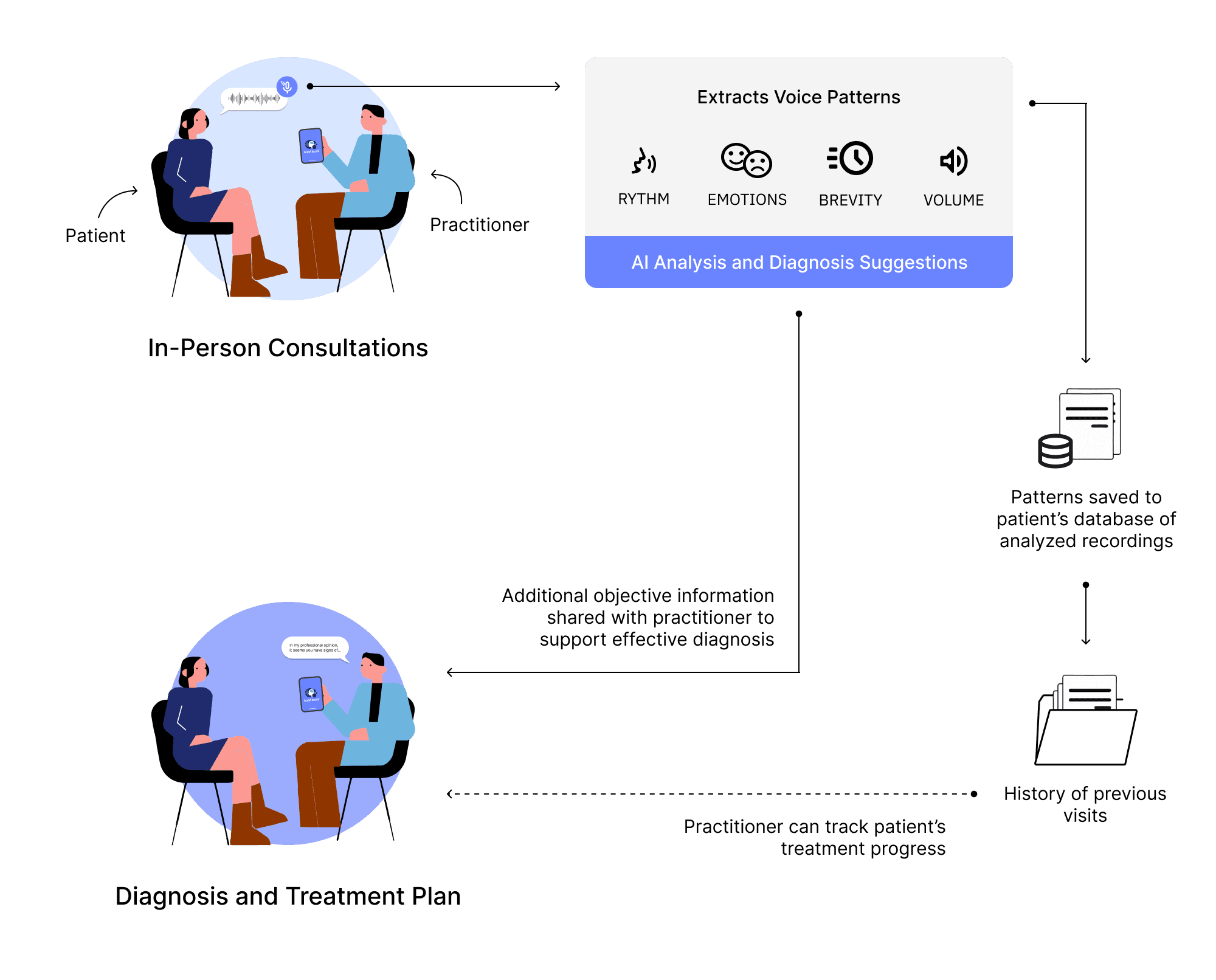

My team and I designed an AI-driven tool that analyzes voice patterns like tone, rhythm, and emotion during therapy sessions. It provides objective insights to support doctors’ decisions and creates session records to help track progress over time.

How Did I Add Value?

As the lead on user research, I collaborated with a cross-functional team and learned to integrate a speech behavior API. Our user-centered design earned us a spot as one of 9 finalist teams (out of 88) to present to Cornell Tech faculty, industry leaders, and VCs.

Skills

User Research

End-to-end Design

Team

Dobko and Nirmal, Developers

Wu, Product Manager

Rajabi, Company Advisor

Timeline

August - December 2024

Tools

Figma

The Problem

An accurate mental health diagnosis can take weeks or even years

This is partly because diagnoses vary from person to person: some people have a single diagnosis, while others have several. Mental health symptoms often affect self-care, life skills, and relationships, so it is essential for patients to receive a diagnosis, the first step toward treatment.

Why does this problem matter?

1 in 5

Adults in the U.S. experience mental illness each year.

NAMI, 2024

50%

Of individuals began showing symptoms of mental illness by age 14.

NHI, 2024

75%

Of individuals experienced symptoms of mental illness by age 24.

NHI, 2024

The Design Challenge

Research & Interviews

How is tech currently integrated in healthcare?

Secondary Research

We researched the stakeholder ecosystem, current patient experience, and technological advancements to get a clear picture of the complex systems within the healthcare industry. We identified a trend towards integrating tech into diagnostics.

system mapping

User Interviews

I led the creation of the interview guides for our conversations with 5 doctors, 5 patients, and 2 medical students. For doctors, we aimed to understand how tech is used in their practices and how they feel about AI. For patients, we wanted to learn about their diagnosis and treatment experience.

“Providers are interested in generative AI, but the research is very new. AI is already used in diagnostics and pathology, classification tasks.”

Medical Student

at Weill Cornell Medicine

Key Insights

Lack of Standardized Diagnosis Tools

Variability in diagnostic criteria and the limitations of subjective screening tools can lead to delayed diagnosis or misdiagnosis.

Symptoms Can Change Over Time

A diagnosis may need to be revised as symptoms evolve to ensure appropriate treatment.

Struggle to Articulate Emotions

Patients may face difficulties articulating or expressing their emotions, which slows or prevents timely diagnosis.

Development

Integrating speech pathology into mental health diagnosis

Mental Health Symptoms Show Up In The Voice

Mental health symptoms often show up in the voice before they’re consciously recognized. For example, depression may present as slowed speech or flat intonation, while anxiety might manifest as rushed or jittery speech.
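To make cues like "slowed speech" concrete, here is a minimal sketch of how speaking rate and pauses could be measured from word-level timestamps. It is an illustration only and not the pipeline or API our team used: the `Word` type, the 0.25-second pause threshold, and the sample timestamps are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the recording
    end: float


def speech_rate_wpm(words):
    """Words per minute, measured from the first word's start to the last word's end."""
    duration = words[-1].end - words[0].start
    return len(words) / duration * 60


def pauses(words, min_gap=0.25):
    """Silent gaps between consecutive words lasting at least min_gap seconds."""
    gaps = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])]
    return [g for g in gaps if g >= min_gap]


# Hypothetical timestamps for illustration only, not study data.
sample = [
    Word("I", 0.0, 0.2),
    Word("felt", 0.3, 0.6),
    Word("tired", 1.6, 2.0),
    Word("today", 2.1, 2.5),
    Word("honestly", 4.0, 5.0),
]

rate = speech_rate_wpm(sample)
long_pauses = pauses(sample)
print(f"{rate:.0f} words per minute, {len(long_pauses)} long pauses, "
      f"mean pause {mean(long_pauses):.2f} s")
```

Tracking numbers like these across sessions is one way a change in speech could be noticed over time. Interpreting them would still be up to the clinician.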

Objective Data for Timely Diagnosis

Subtle voice changes can serve as early indicators, allowing for timely intervention before symptoms worsen.

The Solution

user journey

Testing

Does it Work? Well, we tested it!

I collaborated with engineers and a product manager to identify and test our most uncertain, highest-impact assumptions early in the process. This let us prioritize critical features and reduce the risk of building something that doesn’t resonate with users or meet technical requirements.

Focus Area: AI for Speech and Tone Analysis

Assumption: Mental Health Affects Speech Patterns

Focus Area: Tech Usability

Assumption: People Speak Normally When Recorded

Assumption 01:

Mental Health Affects Speech Patterns

Ten people were enlisted to complete a week-long experiment in which they filled out a mood tracker and sent audio recordings about their day. Our questionnaire was modeled on the clinical guidelines for mental health diagnosis. We interpreted the recordings using an existing speech behavior analysis API by Humane AI.
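As a rough sketch of the comparison described above, the snippet below computes how often a participant's self-reported mood matches the label derived from their voice recording. It is a simplified illustration under stated assumptions: the three-way labels (`positive`, `neutral`, `negative`), the `agreement_rate` helper, and the sample values are hypothetical and are not our study data or the API's actual output format.

```python
def agreement_rate(self_reports, voice_labels):
    """Share of days on which the self-reported mood matches the voice-derived label."""
    if not self_reports or len(self_reports) != len(voice_labels):
        raise ValueError("both lists need one entry per day and cannot be empty")
    matches = sum(a == b for a, b in zip(self_reports, voice_labels))
    return matches / len(self_reports)


# Hypothetical labels for one participant across a week, not study data.
mood_tracker = ["positive", "negative", "positive", "neutral",
                "negative", "positive", "neutral"]
voice_analysis = ["positive", "negative", "neutral", "neutral",
                  "negative", "positive", "positive"]

rate = agreement_rate(mood_tracker, voice_analysis)
print(f"Agreement on {rate:.0%} of days")
```

A simple match rate like this only shows whether the two sources tend to agree. It says nothing about which one is closer to a clinical assessment.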

Clinical Guideline

Our Questionnaire

Participant's Mood Tracker

API's Voice Sentiment Analysis

Day 1

“Confident and focused”

Day 2

“Stressed and focused”

Day 3

“Felt confident”

Key Takeaway

Assumption 02:

People Speak Normally When Recorded

We compared interviewees' answers and mannerisms before and after we began recording the conversation. The participants were randomly selected, unaware of the purpose of the research, and did not know ahead of time that they would be asked to be recorded halfway through.

80% had no significant voice change

We inferred the changes in speech patterns were minimal and wouldn't drastically affect the accuracy of the analysis.

75% took longer pauses as the conversation continued.

We took these pauses as an indicator that participants felt more comfortable the more they spoke with us.

Key Takeaway

Reflection

What did I learn?

I thoroughly loved collaborating with my team as each of our unique perspectives was valued throughout the development process. We were able to thoughtfully consider the user's needs, the tech needed to make our solution feasible, and the roadmap for our product launch. This genuine involvement and curiosity led to a meaningful solution, and we were selected as 1 of 9 teams from a cohort of 80 groups to pitch to Cornell Tech faculty and partners.

A big thank you to my amazing teammates and advisor!

